Human senses are the body's way of perceiving and interacting with the world. The six primary senses or sensory faculties—eye/vision faculty (cakkh-indriya), ear/hearing faculty (sot-indriya), touch/body/sensibility faculty (kāy-indriya), tongue/taste faculty (jivh-indriya), nose/smell faculty (ghān-indriya), and thought/mind faculty (man-indriya)—help us navigate our environment, while additional senses like balance and temperature awareness enhance our perception. These sensory inputs are processed by the brain, shaping our experiences, emotions, and understanding of reality.

Unlike the physical senses, the thought/mind faculty (man-indriya) processes abstract concepts, memories, and emotions, enabling higher cognitive functions such as reasoning, creativity, and self-awareness. It is the core of human intelligence, allowing for introspection, imagination, and ethical decision-making. This cognitive aspect makes human perception unique, as it integrates sensory data with experiences, knowledge, and emotions to create a deep understanding of the world.

While these senses are fundamental to human experience, technological advancements have enabled machines to replicate many of them in various ways. Cameras function as artificial vision, microphones capture sound, tactile sensors detect touch, chemical sensors mimic taste and smell, and gyroscopes provide a sense of balance. These innovations allow machines to perceive and interact with the world in ways increasingly similar to humans.

Motor skills, including fine and gross movements, are closely linked to touch, proprioception (body awareness), and balance. Speech, as a refined motor function, involves intricate coordination of the vocal cords, tongue, and breath, guided by sensory feedback. Machines can mimic these capabilities using robotics for physical movement and speech synthesis for verbal communication, combining sensors, actuators, and AI-driven models to enable dexterous manipulation, fluent speech generation, and expressive voice modulation.

Beyond individual senses, AI is evolving toward multimodal capabilities, where it can integrate multiple sensory inputs—such as combining vision and language understanding—to analyze images, interpret speech, and generate context-aware responses. This enhances human perception and decision-making in fields like healthcare, accessibility, and robotics.

Advancements in AI are also paving the way for higher-order capabilities like reasoning, emotion recognition, and real-time adaptive learning. AI systems can process vast amounts of data, detect patterns, and generate insights that mimic certain aspects of human cognition.

However, AI lacks true consciousness, self-awareness, deep intuition, and the rich subjective experience derived from the thought/mind faculty. Unlike humans, AI does not possess genuine emotions, ethical judgment, or the ability to reflect on its own existence.

These fundamental gaps highlight the distinction between artificial intelligence and human intelligence. While AI can augment human decision-making and automate complex tasks, it remains limited in replicating the depth of perception, consciousness, and meaningful experiences that arise from the human thought/mind faculty.

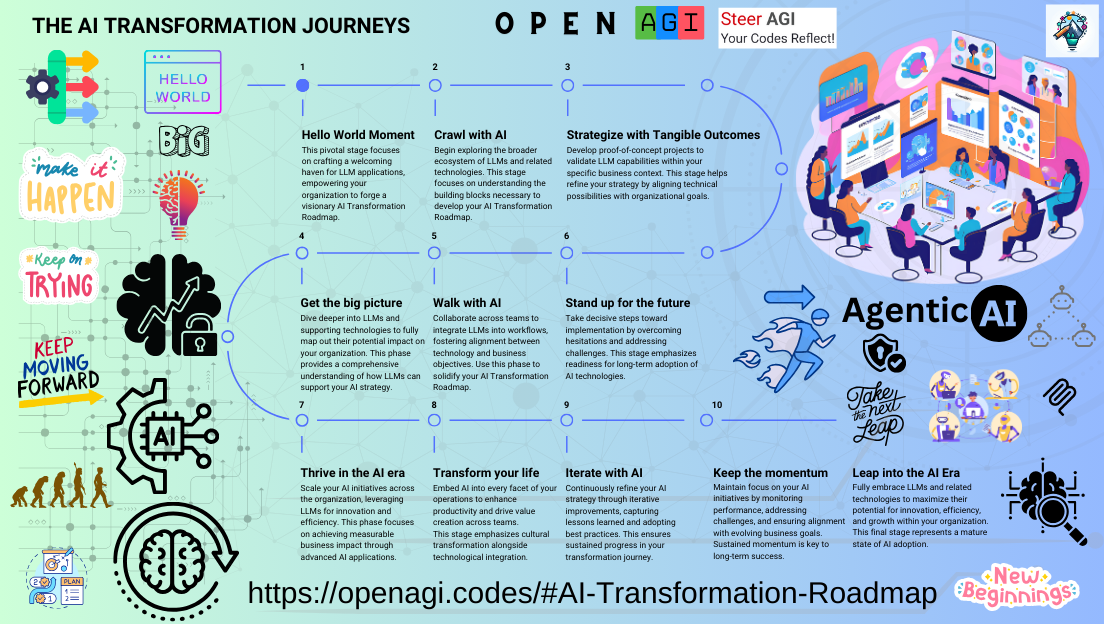

The question of whether artificial intelligence (AI) poses a threat to human existence is complex and multifaceted. While AI offers significant benefits, such as augmenting human capabilities and improving efficiency, it also presents potential risks that warrant careful consideration.

One concern is that AI could surpass human intelligence, leading to scenarios where AI systems operate beyond human control. Dario Amodei, co-founder and CEO of the AI start-up Anthropic, has predicted that superintelligent AI, capable of outperforming humans across various fields, could emerge as soon as next year.

Elon Musk has also expressed concerns about AI, estimating a 20% chance that AI could pose existential risks to humanity. These perspectives underscore the importance of proactive measures to ensure AI development aligns with human values and safety.

To mitigate these risks, it is crucial to establish robust ethical frameworks and regulatory measures that guide AI development and deployment. This includes addressing issues such as data privacy, algorithmic bias, transparency, and accountability. As AI continues to evolve, fostering collaboration among governments, industry leaders, and the public is essential to navigate the challenges and opportunities presented by this transformative technology.

In conclusion, while AI holds immense potential to drive progress and innovation, it is imperative to approach its development with caution and ethical consideration. By implementing responsible practices and policies, we can harness the benefits of AI while safeguarding against potential threats to human existence.

Bill Gates recently stated that while artificial intelligence is transforming many aspects of our work, it won't replace humans in all professions. In his view, AI will significantly enhance efficiency in tasks like disease diagnosis and DNA analysis, yet it lacks the creativity essential for groundbreaking scientific discoveries. According to his comments, three specific professions are likely to remain indispensable in the AI era:

Coders

Although AI can generate code, human programmers are still vital for identifying and correcting errors, refining algorithms, and advancing AI itself. Essentially, AI requires skilled coders to build and continually improve its systems.

Energy Experts

The energy sector is characterized by its intricate systems and strategic decision-making requirements. Gates argues that the field is too complex to be fully automated, necessitating the expertise of human professionals to manage and innovate within this domain.

Biologists

While AI can analyze vast amounts of biological data and assist with tasks like disease diagnosis, it falls short in replicating the intuitive, creative insight required for pioneering scientific research and discovery.

Bill Gates envisions AI as a tool that will augment human capabilities, particularly in professions requiring complex judgment and innovation, such as coding, energy expertise, and biology. Elon Musk, by contrast, predicts a future where AI and robotics could render traditional employment obsolete, suggesting that "probably none of us will have a job" as AI provides all goods and services. He proposes a "universal high income" to support individuals in such a scenario. These differing perspectives highlight the ongoing debate about AI's role in the workforce. While AI's influence is undeniable, many experts believe that human creativity, emotional intelligence, and complex problem-solving abilities will continue to hold significant value, suggesting that AI will serve more as a complement to human labor than as a wholesale replacement.

The future is not a place to visit; it is a place to create. In the age of AI, machines may shoulder routine tasks, but the true breakthroughs will still be born from human ingenuity. Our future isn't solely about coders, energy experts, or biologists; it's about every professional harnessing technology to amplify their unique strengths. Whether you're a creative, an educator, a healthcare worker, or in any other field, your vision and passion remain irreplaceable. Embrace AI as a powerful tool to elevate your work, and never lose hope in your chosen path. Your journey, like our collective future, is full of promise and possibility.